Feeling the heat

We’ve all felt the ramifications of climate change this year: from heat waves and wildfires to downpours and flooding, 2023 has given us a taste of what to expect over the coming decades. In short, it’s not good news. Without significant reductions in greenhouse gas emissions, in tandem with accelerated deployment of long-term carbon removals, global surface temperatures will exceed the 1.5-2°C thresholds set in the 2015 Paris climate agreement. Had global emission reductions started years earlier, these technologies might have been unnecessary. Unfortunately, that moment has passed: emission cuts alone can no longer keep us within those limits.

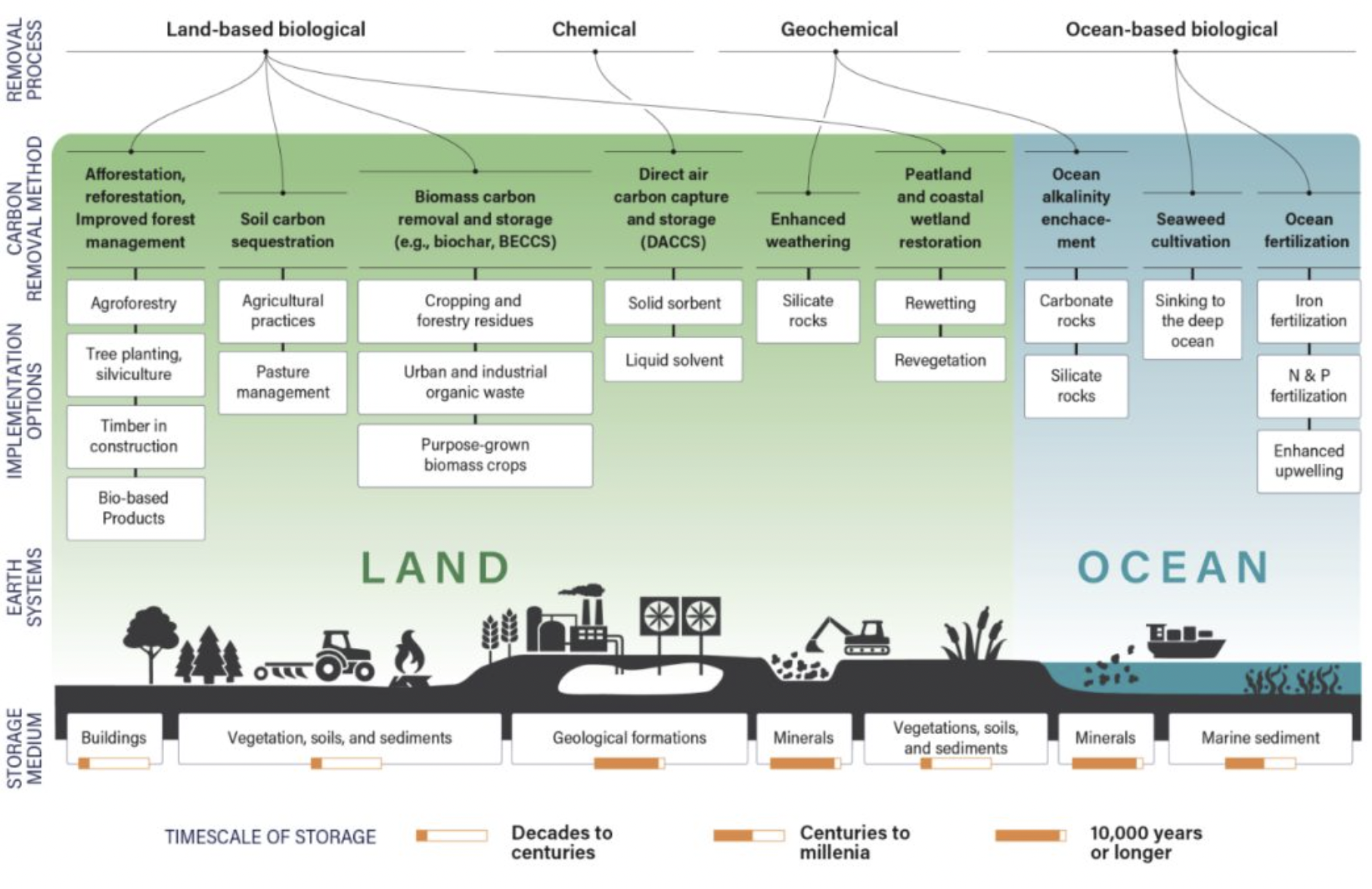

Accelerating the scale-up of abiotic carbon dioxide removal (CDR) methods, i.e., chemical, geochemical, and engineered approaches to CDR, is key to climate change mitigation and to ensuring that sufficient removals are deployed by 2050 and beyond.

What are Abiotic NETs?

Fundamentally, abiotic negative emissions technologies (NETs) exploit CO2 chemistry. These methods accelerate natural abiotic carbon processes in three main ways: by enhancing the kinetics of CO2 mineralisation to form carbonate rocks; by increasing the ocean’s capacity to sequester CO2 through alkalinity enhancement; or through hybrid chemical and engineering processes that separate and concentrate ultra-dilute CO2 from ambient sources, capturing it via chemisorption or physisorption for storage in deep underground formations or, alternatively, for conversion into useful, valuable materials. These are generally deemed higher-quality removal pathways than biotic and nature-based solutions because of their permanence, durability, and ease of CO2 quantification, but they are currently held back by high costs.

The Challenge Ahead:

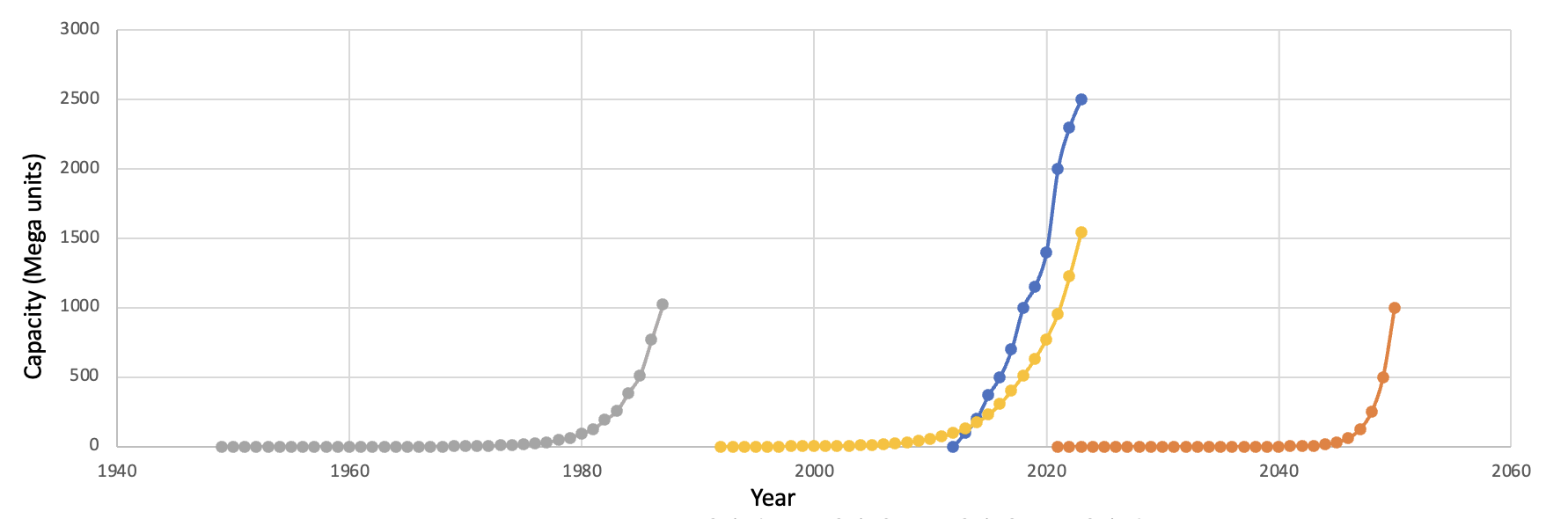

Given the role these technologies will play on our path to net-zero GHG emissions, it’s hard to believe how nascent the CDR industry is. The largest players, such as Climeworks, Carbon Engineering, and CarbFix, entered the picture less than two decades ago. Historically, key industries have typically taken centuries to optimise, scale, and establish a fully functional supply chain. Manufactured plastic, for example, was first patented in 1862, but the industry only perfected the recipe half a century later with the invention of the first fully synthetic plastic, made by combining two chemicals (formaldehyde and phenol) under heat and pressure. It’s now a fully scaled, commercialised industry that is commonplace in our lives, yet annual plastics production is a mere 0.5 Gt, compared with the 1 Gt and 10 Gt of annual removals conservatively estimated to be required from CDR by 2030 and 2050, respectively. Only concrete, the most ubiquitous building material, is produced at volumes similar to our CDR targets; and although it was first used in Syria around 6500 BC, it only entered mass manufacture in the early 20th century, on the back of Portland cement.

In recent years, software companies have surpassed these scaling rates; Instagram, for example, grew to over 100 million users within a few years of launch. A range of new software technologies has followed, including the crypto boom associated with Web3 and blockchain, and a more recent wave of generative AI. These technologies have not been without fault. Often built on a “move fast and break things” ethos, they have frequently prioritised their transformational potential over the significant social, ethical, and environmental concerns that accompany them. Climate technologies must pursue equivalent rates of scaling while minimising potential negative externalities and co-hazards.

Scaling hardware at these rates is difficult, but there is precedent. The best known is arguably Moore's law, which observes that the number of transistors on a microchip doubles roughly every two years. More recently, Swanson's law observes that for every doubling of cumulative production volume, the price of solar photovoltaic (PV) modules falls by 20%, leading to a 30% increase in cumulative installed capacity. Both echo the lesser-known Wright's law, first observed in aircraft manufacturing, which states that unit costs fall by a constant percentage with every doubling of cumulative production. While Moore's law describes technological change as a function of time, and Swanson’s as a function of experience, Wright's law captures “learning by doing”: it combines innovation and economies of scale, with efficiency gains following cumulative production and the significant investment behind it.
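
Wright's law is usually written as a power law in cumulative production. The sketch below is a minimal illustration assuming that standard experience-curve form; the 20% learning rate is borrowed from the Swanson's law figure above, and the function names are hypothetical.

```python
import math


def learning_exponent(learning_rate: float) -> float:
    """Exponent b for which unit cost falls by `learning_rate` per doubling of output."""
    return -math.log2(1.0 - learning_rate)


def wright_cost(initial_cost: float, initial_volume: float,
                cumulative_volume: float, learning_rate: float) -> float:
    """Unit cost once cumulative production grows from initial_volume to cumulative_volume."""
    b = learning_exponent(learning_rate)
    return initial_cost * (cumulative_volume / initial_volume) ** (-b)


def doublings_to_reach(cost_ratio: float, learning_rate: float) -> float:
    """Doublings of cumulative production needed to cut cost to cost_ratio of today's level."""
    return math.log(cost_ratio) / math.log(1.0 - learning_rate)


# Hypothetical example: a 20% learning rate, as in Swanson's law for PV modules.
cost_after_3_doublings = wright_cost(100.0, 1.0, 8.0, 0.20)  # equals 100 * 0.8**3
doublings_to_halve = doublings_to_reach(0.5, 0.20)

print(f"Cost after 3 doublings: {cost_after_3_doublings:.1f}")
print(f"Doublings needed to halve cost: {doublings_to_halve:.2f}")
```

Because costs fall per doubling of cumulative output rather than per year, faster deployment translates directly into faster cost reduction, which is the “learning by doing” mechanism described above.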

When it comes to investment and cost, must affordability come first to stimulate demand, or will demand drive down costs and improve affordability? In 1962, in the earliest days of the integrated circuit, the US government purchased every single one produced. In fact, from 1955 to 1977, government procurement accounted for 38% of all semiconductors produced in the US. As the first and largest customer, the government supported the semiconductor industry in its infancy and created a demand environment in which companies had incentives to advance the state of the art. These advances, alongside rapid progress along Wright’s law, contributed to the steep cost decline from $32 for a single chip in 1961 to $1.25 just a decade later. The US may no longer be in the midst of a space or arms race, but a global war on climate change, waged through carbon removal, is surely a more important one to fight.
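
To put the chip price figures in perspective, the arithmetic below converts the drop from $32 to $1.25 over a decade into an implied average annual decline. It assumes a constant yearly rate, which is a simplification; real price paths were lumpier.

```python
def annual_decline_rate(start_price: float, end_price: float, years: float) -> float:
    """Constant yearly fractional price decline that takes start_price to end_price."""
    return 1.0 - (end_price / start_price) ** (1.0 / years)


start, end, years = 32.0, 1.25, 10  # chip prices cited above, 1961 to a decade later
overall_factor = start / end
rate = annual_decline_rate(start, end, years)

print(f"Overall reduction: {overall_factor:.1f}x")
print(f"Implied average decline: {rate:.1%} per year")
```

A roughly 25-fold reduction in ten years works out to a price cut of a little under 28% every year.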

Our Opportunities in CDR:

It’s clear that the challenge ahead is significant, both in scale and in the limited time we have to achieve it. But it is doable. We have identified three key opportunities in the abiotic NET value chain that are critical for scaling CDR to Gton removal capacity.

1. Enabling Carbon Capture Processes

To make a significant dent in atmospheric CO2, direct air capture (DAC) must be scaled up fast. The IEA estimates that an average of 32 large-scale plants, each capable of capturing 1 Mt of CO2 per year, need to be built annually between now and 2050. Total capacity will have to reach at least 1 Gt per year by then, roughly 100,000 times today’s level, which implies an average capacity increase of around 50% per year.
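
The two IEA figures can be cross-checked with a little arithmetic. The sketch assumes a 2023 starting point (our assumption, not the IEA's) and shows that a 100,000-fold scale-up over 27 years implies growth slightly above 50% per year, and that 32 one-Mt plants per year add up to roughly 0.9 Gt/yr of capacity.

```python
scale_up_factor = 100_000   # "roughly 100,000 times" today's capacity
years = 2050 - 2023         # assumption: counting from 2023 to 2050
plants_per_year = 32        # IEA average build rate cited above
plant_capacity_mt = 1.0     # Mt CO2 captured per plant per year

# Compound annual growth rate implied by a 100,000-fold scale-up.
cagr = scale_up_factor ** (1 / years) - 1

# Capacity added if 32 one-Mt plants are built every year over the same period.
cumulative_capacity_gt = plants_per_year * years * plant_capacity_mt / 1000

print(f"Implied growth: {cagr:.0%} per year")
print(f"Capacity from new plants: {cumulative_capacity_gt:.2f} Gt/yr")
```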

DAC plants are built around a DAC unit, which interacts with the existing energy supply system, the material, component, and equipment supply chain, and the CO2 transport and storage infrastructure. The DAC unit itself comprises a sorption material, a contactor that exposes ambient air to the sorbent, and a regeneration process. Scaling it requires optimising three sets of variables: those governed by chemistry, which determine capture efficiency and drive the development of advanced nanomaterials (metal-organic frameworks, zeolites) and solvents (metal hydroxides, amines); those governed by physics, which drive airflow through the contactor; and those governed by a hybrid of both, which drive CO2 recovery, i.e., the endothermic release of CO2 from the material it is bound to. Recovery is normally temperature-driven, using thermal energy to break the molecular or valence interactions, but recent advances include moisture-driven (ion-exchange membrane), pressure-driven (membrane as a sieve), and voltage-driven (electrochemical separation) processes.
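
The architecture described above can be summarised as a simple data model. This is purely an illustrative sketch: the class and field names are hypothetical, and the example configuration is not any specific company's design.

```python
from dataclasses import dataclass
from enum import Enum


class RegenerationDriver(Enum):
    """How CO2 is released from the sorbent (the regeneration routes named above)."""
    TEMPERATURE = "thermal energy breaks the sorbent-CO2 interaction"
    MOISTURE = "humidity swing across an ion-exchange membrane"
    PRESSURE = "membrane acting as a molecular sieve"
    VOLTAGE = "electrochemical separation"


@dataclass
class DACUnit:
    sorbent: str                      # chemistry: e.g. MOF, zeolite, metal hydroxide, amine
    contactor: str                    # physics: how air is moved past the sorbent
    regeneration: RegenerationDriver  # chemistry + physics: how CO2 is recovered


@dataclass
class DACPlant:
    unit: DACUnit
    energy_supply: str          # existing energy system the unit draws on
    supply_chain: str           # materials, components, and equipment
    transport_and_storage: str  # where the recovered CO2 goes


# Hypothetical configuration, loosely modelled on a solid-sorbent design.
plant = DACPlant(
    unit=DACUnit("amine-functionalised solid", "fan-driven air contactor",
                 RegenerationDriver.TEMPERATURE),
    energy_supply="low-carbon heat and electricity",
    supply_chain="regional sorbent and equipment suppliers",
    transport_and_storage="pipeline to geological storage",
)
print(plant.unit.regeneration.name)
```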

Energy requirements (and the associated costs) are commonly touted as the main barrier to scaling. Carbon Engineering’s technology (solvent-based DAC with a high-temperature regeneration step), for example, requires ~2,500 TWh to remove 1 Gt of CO2 per year, comparable to Climeworks’ (solid-sorbent DAC with a low-temperature, temperature-vacuum swing adsorption (TVSA) regeneration step); that is equivalent to the amount of renewable energy currently produced. Allocating all annual renewable production to DAC is clearly unrealistic given fierce competition for these resources. Renewables are expected to grow by 13% per year and more than double by 2030, and there is no shortage of solar energy and vast untapped geothermal energy, both of which must be scaled in tandem. In the meantime, low-cost methane could feasibly be used to scale DAC, at a ~17% reduction in cost per tonne. In addition, next-generation DAC companies such as Verdox claim that their patented electro-swing approach, which electrochemically reduces a solid quinone electrode so that it adsorbs CO2, uses up to 70% less energy than conventional methods by avoiding losses from heating and cooling. Similarly, Mission Zero, one of our own portfolio companies, uses an electrodialysis cell and a liquid sorbent to drive CO2 regeneration through an ion-exchange membrane, with similar energy reductions. So far, DAC innovation has targeted the endothermic regeneration phase; combining it with a similar effort on the contactor side over the coming years could reap similar energy savings.
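
The energy figures above translate into per-tonne terms as follows. This is back-of-the-envelope arithmetic using only the numbers in the text; the 2023 baseline year for renewables growth is our assumption, and applying the 70% saving to the full 2,500 TWh treats Verdox's “up to” figure as a best case.

```python
TWH_TO_KWH = 1e9    # 1 TWh = 10^9 kWh
KWH_TO_GJ = 0.0036  # 1 kWh = 3.6 MJ

total_energy_twh = 2500.0  # energy cited above for 1 Gt CO2 per year
removal_tonnes = 1e9       # 1 Gt

kwh_per_tonne = total_energy_twh * TWH_TO_KWH / removal_tonnes
gj_per_tonne = kwh_per_tonne * KWH_TO_GJ

# Best case for the "up to 70%" energy saving claimed for electro-swing DAC.
electro_swing_twh = total_energy_twh * (1 - 0.70)

# Renewables growing 13% per year from 2023 to 2030 (7 years, our assumption).
renewables_growth_factor = 1.13 ** 7

print(f"{kwh_per_tonne:.0f} kWh/t ({gj_per_tonne:.1f} GJ/t)")
print(f"Electro-swing at -70%: ~{electro_swing_twh:.0f} TWh per Gt")
print(f"Renewables multiplier by 2030: {renewables_growth_factor:.2f}x")
```

The last line confirms that 13% annual growth compounds to well over a doubling by 2030.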

The sorption material and component supply chain is, in theory, resilient and has relatively strong foundations. Chemical production is well distributed globally, with BASF (Germany), the Dow Chemical Company (USA), and Sinopec (China) among the three largest chemical producers, any of which could feasibly expand into mass production of MOF- or MEA-based sorbents. However, there is currently a lack of fit-for-purpose equipment: air contactors, calciners, fans/blowers, CO2 compressors, and energy equipment such as heat pumps, heat exchangers, and energy storage systems. From a skills perspective, companies such as Siemens could in principle mass-manufacture this equipment. Germany, the USA, and China are also developed industrial hubs from which strong regionalised, rather than globalised, DAC supply chains could be established, alongside dedicated DAC module manufacturing, to initially serve the European, Americas, and Asia-Pacific markets.

Given how young DAC technology is, large-scale production is limited by a lack of technology validation at scale (beyond TRL 6). Consequently, there is plenty of room for process optimisation, both by exploiting synergies between material and process development and by exploring the potential of the process itself, e.g., optimising cycle steps and sequences. It may be possible to improve DAC’s efficiency enough to reach the Gton level by 2050 through large-scale production, sophisticated process design and engineering, and ongoing research and development. However, this growth rate must be higher than that of semiconductors and PV, which are comparatively single-component technologies. Since DAC systems consist of several components, efficiency may rise more quickly if they are optimised simultaneously.
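
One way to see why simultaneous optimisation helps is to treat DAC's energy or cost per tonne as a product of per-component factors. That multiplicative model is our simplifying assumption, not an established result for DAC, and the 10% figures are purely illustrative.

```python
from math import prod


def combined_reduction(component_reductions: list[float]) -> float:
    """Overall fractional cut in a quantity modelled as a product of per-component factors."""
    return 1.0 - prod(1.0 - r for r in component_reductions)


# Hypothetical: sorbent, contactor, and regeneration each improve by 10% in a period.
single = combined_reduction([0.10])
simultaneous = combined_reduction([0.10, 0.10, 0.10])

print(f"One component optimised: {single:.1%}")
print(f"Three optimised together: {simultaneous:.1%}")
```

Under this model, three modest component improvements compound to a 27.1% overall reduction, compared with 10% when only one component improves.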

2. Enabling Carbon Transport and In-Situ Storage

Over the past decade, the focus has predominantly been on carbon capture technologies, but a gap is emerging between the anticipated demand for CO2 storage and the pace at which storage facilities are being developed. Today, over 10 MtCO2/yr of captured CO2 is injected for dedicated storage at commercial-scale sites, and this capacity is set to increase to around 0.1–0.5 GtCO2/yr by 2030 (ambient and point source). Even so, major DAC player Global Thermostat currently vents all the CO2 captured during its pilot phase, and many others are still searching for suitable sequestration partners. Moreover, how to transport CO2 safely and reliably from capture site to storage facility remains an open question, and the answer depends on the quantity and composition of the CO2 and the distance over which it is transported.
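
The storage figures above imply steep growth. Taking today's 10 Mt/yr as a 2023 baseline and allowing seven years to 2030 (our assumption), the implied compound annual growth rate $g$ for storage capacity $C$ (in Mt/yr) is:

```latex
g = \left(\frac{C_{2030}}{C_{2023}}\right)^{1/7} - 1,
\qquad
\left(\frac{100}{10}\right)^{1/7} - 1 \approx 39\%,
\qquad
\left(\frac{500}{10}\right)^{1/7} - 1 \approx 75\%
```

Storage therefore needs to grow at a pace comparable to the ~50% per year required of DAC itself.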

High-level geological analysis suggests that the world has ample CO2 storage capacity. Using geospatial data on sedimentary thickness and other parameters, total global storage capacity has been estimated at between 8,000 Gt and 55,000 Gt. The main constraints on development are high capex, negative public perception, and a lack of publicly available data (such data exist but are guarded by oil and gas giants). While problems do exist, it’s worth mentioning that the oil and gas (O&G) industry has honed the fundamentals of this technology over several decades. In fact, after the Norwegian government levied a carbon tax on fossil fuels and extended it to cover other CO2-emitting sources, Statoil (now Equinor) began capturing the CO2 separated during its natural-gas processing at the Sleipner field and injecting it into a saline formation beneath the seabed. Oil companies had long used similar technology to pump CO2 into mature oil fields, ironically to eke out the remaining oil through “enhanced oil recovery.” But Statoil’s Sleipner project was the first to pump CO2 underground for no other reason than to keep it out of the atmosphere, and all it took was a tax incentive.
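
To put the storage estimates in context, the sketch divides them by the 10 Gt/yr of removals cited earlier as the 2050 requirement. It assumes all of those removals go underground and ignores point-source capture, which would compete for the same capacity.

```python
storage_capacity_gt = (8_000, 55_000)  # global estimates cited above
removal_rate_gt_per_yr = 10            # 2050 CDR requirement cited earlier

# Years of injection at a constant rate before the estimated capacity is used up.
years_of_storage = tuple(c / removal_rate_gt_per_yr for c in storage_capacity_gt)
print(f"Capacity lasts ~{years_of_storage[0]:,.0f} to {years_of_storage[1]:,.0f} years")
```

Even the low estimate covers roughly eight centuries of injection at that rate, which is why data access and public acceptance, rather than physical capacity, are the binding constraints.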

Recent studies of safety concerns and public perception have found that, with careful due diligence in site selection, management, and monitoring, the risk associated with underground carbon storage is minimal. Over 10,000 years, an offshore baseline scenario is expected to experience the least leakage (0.53% of total stored CO2), while a poorly regulated onshore scenario is expected to experience the most (11.3%). Given the quantity of CO2 currently emitted through industrial processes and during the testing and piloting of carbon capture methods, this level of leakage pales into insignificance. Some companies (e.g., Carbfix and 44.01) go further, aiming to bypass the leakage issue altogether by mineralising CO2 in reactive rock formations such as basalts to form stable minerals deep underground. This allays some of the concerns around in-situ CO2 storage, although these approaches require large amounts of water, high injection pressures, and geological formations found only in specific locations.
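
The leakage percentages can also be expressed per tonne and per year. The sketch assumes 1 Gt of stored CO2 (a hypothetical quantity) and models leakage as a constant fractional rate; that exponential-decay simplification is ours and is not necessarily what the cited studies use.

```python
import math

HORIZON_YEARS = 10_000
STORED_TONNES = 1e9  # hypothetical: 1 Gt of CO2 injected

# Fraction of stored CO2 leaked over 10,000 years, from the scenarios above.
leaked_fraction = {
    "offshore baseline": 0.0053,
    "poorly regulated onshore": 0.113,
}

results = {}
for scenario, fraction in leaked_fraction.items():
    # Continuous leakage rate k such that exp(-k * T) equals the retained fraction.
    annual_rate = -math.log(1.0 - fraction) / HORIZON_YEARS
    results[scenario] = (fraction * STORED_TONNES, annual_rate)
    print(f"{scenario}: {fraction * STORED_TONNES / 1e6:.1f} Mt leaked, "
          f"~{annual_rate:.1e} of the stored CO2 per year")
```

Even the poorly regulated case corresponds to losing about one hundred-thousandth of the stored CO2 each year.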

There have been several recent government funding calls for CO2 hub developments (clusters of facilities that share the same CO2 transport and storage infrastructure) in Canada, Europe, and the United States. For example, the recently announced Project Cypress DAC Hub in Southwest Louisiana and the South Texas DAC Hub bring multiple DAC companies together to access shared infrastructure (low-carbon energy sources, material and component suppliers, and underground storage sites), so they can scale their technology faster and at lower cost, including lower transport costs. In Europe, Sval, Storegga, and Neptune have applied for a CO2 storage licence in the Norwegian North Sea, the Trudvang licence, which has the potential to store up to 225 million tonnes of CO2 by 2050. They aim to develop further storage licences in the North Sea and to build a common, pipeline-based infrastructure that could substantially reduce costs across CCS value chains. Meanwhile, Deep Sky, a private CDR project developer, is aggregating the most promising direct air and ocean carbon capture companies under one roof to develop Canada into a world-leading hub for carbon removal.

3. Enabling Carbon Conversion and Ex-Situ Storage

There are storage options beyond geological storage, but tapping into them requires converting the CO2 into more permanent forms. These include storage in the anthroposphere, e.g., in buildings, agricultural soil, or circular material chains, collectively known as carbon utilisation. However, many carbon utilisation pathways do not offer permanent storage; they often cycle CO2 back into the atmosphere on short timescales, so not all of them count as “negative emissions”. Nevertheless, alternative uses and markets for captured CO2 will likely be necessary. These include making materials, polymers, and chemicals from CO2 that are currently made from fossil-derived carbon, and finding new uses for CO2 in construction materials, as has been demonstrated in cement and concrete manufacturing. CarbonCure has developed an approach that injects CO2 into fresh concrete, where it mineralises and increases compressive strength, reducing the need for energy-intensive cement. Carbon Upcycling has developed a mechano-chemical reactor that combines CO2 with waste materials from different industries (steel, glass, coal-fired power, and plastic) to produce low-carbon concrete and plastics. Aether, meanwhile, targets high-value, low-volume diamonds, made through a methanation process combined with chemical vapour deposition, as a profitable offtake for DAC operators.

The key technical constraints on CO2 conversion stem from its underlying chemistry. Carbon dioxide is a stable, relatively inert molecule. Carbon, a first-row element in group 14, has four valence electrons and can form four strong covalent bonds with other first- and second-row main-group elements; in CO2 these take the form of two C=O double bonds. Most conversion reactions require breaking one or more of these strong C–O bonds, which is endothermic, so energy must be supplied externally, and the reaction must also overcome a high activation barrier. This explains why most technologies today use mineralisation pathways, in which CO2 is converted into its anionic form (bicarbonate or carbonate) before combining with cations to form a solid mineral, avoiding full reduction processes that are prohibitively energy-intensive.
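
The mineralisation route can be written out to show why it sidesteps reduction: carbon stays in the +4 oxidation state at every step, moving from CO2 to bicarbonate and carbonate and finally into a solid carbonate. Calcium and forsterite (the magnesium end-member of olivine, a mineral common in basalts) are illustrative choices of ours rather than the specific chemistry of any company mentioned here.

```latex
\begin{aligned}
&\mathrm{CO_2(g) + H_2O \rightleftharpoons H_2CO_3 \rightleftharpoons H^+ + HCO_3^- \rightleftharpoons 2\,H^+ + CO_3^{2-}} \\
&\mathrm{Ca^{2+} + CO_3^{2-} \longrightarrow CaCO_3(s)} \\
&\mathrm{Mg_2SiO_4 + 2\,CO_2 \longrightarrow 2\,MgCO_3 + SiO_2}
\end{aligned}
```

No C=O bond is fully reduced in any of these steps; the carbonate simply pairs with a cation, which is why these pathways are far less energy-intensive than converting CO2 into fuels or chemicals.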

This challenge is conventionally addressed through catalysis, which activates CO2 by donating electron density into, or withdrawing it from, the molecule’s orbitals, narrowing the HOMO-LUMO gap and lowering the activation barrier for conversion. However, these strategies often suffer from low selectivity: they produce broad product mixtures that complicate downstream processing, and improving selectivity requires meticulous optimisation of conditions, which can compromise yield. Other challenges are catalyst degradation over time, which erodes process efficiency, and sourcing critical minerals such as Cu, Zn, Cr, Pt, Pd, Rh, and Au affordably and at scale. Alternative ways of tackling the stability constraint include using inherently unstable, reactive co-reactants (e.g., highly strained three-membered rings such as epoxides, which react with CO2 to form cyclic carbonates), which may render the reaction exothermic; shifting the chemical equilibrium by generating or removing specific co-products; or adding energy to the reaction in the form of electricity or light, or via magnetically enhanced technologies. When looking for viable approaches to scale CO2 storage processes, it is also essential to understand how each CO2-derived product pathway compares with its conventional production pathway.
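
The effect of lowering an activation barrier can be quantified with the Arrhenius equation, k = A·exp(-Ea/RT). The sketch below is illustrative: the 20 kJ/mol drop and 298 K temperature are hypothetical values, and it assumes the catalyst leaves the pre-exponential factor A unchanged.

```python
import math

R = 8.314  # gas constant, J/(mol*K)


def rate_enhancement(barrier_drop_j_per_mol: float, temperature_k: float) -> float:
    """Arrhenius rate ratio k_cat / k_uncat for a lowered activation energy.

    Assumes the pre-exponential factor A is unchanged by the catalyst.
    """
    return math.exp(barrier_drop_j_per_mol / (R * temperature_k))


# Hypothetical: a catalyst that lowers the barrier by 20 kJ/mol at room temperature.
speedup = rate_enhancement(20_000, 298.0)
print(f"Rate increases ~{speedup:,.0f}-fold")
```

A catalyst changes only the rate, not the overall energy balance: for a reaction that is uphill overall, energy still has to come from somewhere, which is why strained co-reactants, equilibrium shifting, and electrical or light input are also needed.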

Time to start scaling

Carbon removal is now firmly on the global map, and it is here to stay. We’re going to need a portfolio of solutions, using different methods and storing carbon for anywhere from decades to millennia. To put the challenge into perspective: we’re essentially tasked with building an entirely new industry from the ground up within 20 years. It will be one of humanity’s toughest feats, which is why we’re so passionate about making progress today.

We will need to combine efficiency gains across every individual input and component, learning from experience, with significant investment and economies of scale, in order to exceed the learning rates described by Wright’s law. To avoid the social, ethical, and environmental controversies seen in the software industry, rigorous life-cycle assessments (LCA) and techno-economic assessments (TEA) must be performed, combined with robust, science-based monitoring, reporting, and verification (MRV). Mistakes and lessons learned will need to be shared openly within the CDR community, while the industry keeps the general public informed and takes public opinion into account. After all, there is no shortage of anthropogenic CO2 to remove, so CDR is not a zero-sum game. We will win or lose together.